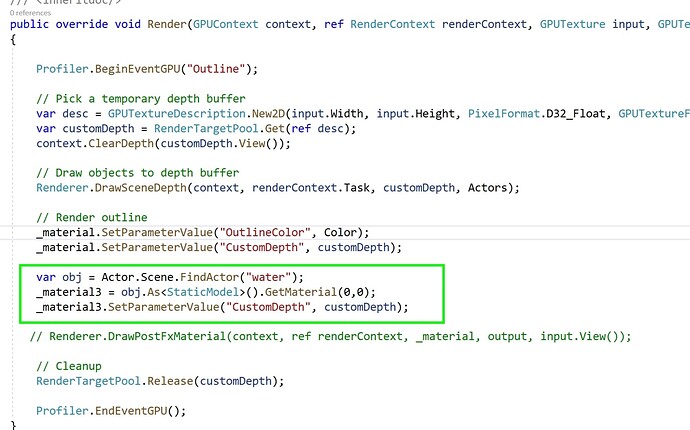

Thank you mafiesto4! Yes, I was using “Post Process Effect”. Sorry, I’m a bit of a novice. Instead of “Post Process Effect”, I tried using a “script” as in the link you gave, but I couldn’t figure out how to initialize GPUContext and RenderContext. I tried it like this:

```csharp
renderContext = new RenderContext();
// This is the call that fails inside OnEnable() (see the stack trace below)
context = new GPUContext();
.....
var desc = GPUTextureDescription.New2D(1465, 820, PixelFormat.D32_Float, GPUTextureFlags.DepthStencil | GPUTextureFlags.ShaderResource);
var customDepth = RenderTargetPool.Get(ref desc);
context.ClearDepth(customDepth.View());

// Draw objects to depth buffer
Renderer.DrawSceneDepth(context, renderContext.Task, customDepth, Actors);
.....
```

Then I get this error:

```
[ 00:10:06.541 ]: [Error] Failed to spawn object of type 'FlaxEngine.GPUContext'.
[ 00:10:06.543 ]: [Warning] Exception has been thrown during . Failed to create native instance for object of type FlaxEngine.GPUContext (assembly: FlaxEngine.CSharp, Version=1.3.6228.0, Culture=neutral, PublicKeyToken=null).
Stack strace:
  at FlaxEngine.Object..ctor () [0x00030] in F:\FlaxEngine\Source\Engine\Scripting\Object.cs:50
  at FlaxEngine.GPUContext..ctor () [0x00000] in F:\FlaxEngine\Cache\Intermediate\FlaxEditor\Windows\x64\Development\Graphics\Graphics.Bindings.Gen.cs:1835
  at OutlineRenderer.OnEnable () [0x0000c] in C:\Users\ElVahel\Documents\Flax Projects\Shadertest\Source\Game\pp.cs:79
  at (wrapper native-to-managed) OutlineRenderer.OnEnable(OutlineRenderer,System.Exception&)
[ 00:10:06.543 ]: [Warning] Exception has been thrown during .
Failed to create native instance for object of type FlaxEngine.GPUContext (assembly: FlaxEngine.CSharp, Version=1.3.6228.0, Culture=neutral, PublicKeyToken=null)
```

I know this is a very basic question, and I’m embarrassed to keep taking up your time with questions like this. But even though I searched the internet all night and read through the engine’s source code trying to understand the approach, I still couldn’t get it working. The manual is very good, but unfortunately it has very few examples.

I would appreciate it if you could guide me a little more on the subject.

Edit: I’ve also tried deriving from RenderTask (`OutlineRenderer : RenderTask`). This time I can’t override `Render` as a method:

```
error CS0505: 'OutlineRenderer.Render(GPUContext, ref RenderContext)': cannot override because 'RenderTask.Render' is not a function.
```