Thank you for the suggestions. Unfortunately, adding those lines still did not let me write to the depth buffer. I may be missing something basic, but I have not been able to figure out what.

My test code is essentially the custom geometry drawing sample you linked to:

namespace Game;

using System;

using System.Runtime.InteropServices;

using FlaxEngine;

public class CustomGeometryDrawing : PostProcessEffect

{

/// <summary>

/// Shader constant buffer data structure that matches the HLSL source.

/// </summary>

[StructLayout(LayoutKind.Sequential)]

private struct Data

{

public Matrix WorldMatrix;

public Matrix ViewProjectionMatrix;

}

private static readonly Float3[] _vertices =

{

new Float3(0, 0, 0),

new Float3(100, 0, 0),

new Float3(100, 100, 0),

new Float3(0, 100, 0),

new Float3(0, 100, 100),

new Float3(100, 100, 100),

new Float3(100, 0, 100),

new Float3(0, 0, 100),

};

private static readonly uint[] _triangles =

{

0, 2, 1, // Face front

0, 3, 2,

2, 3, 4, // Face top

2, 4, 5,

1, 2, 5, // Face right

1, 5, 6,

0, 7, 4, // Face left

0, 4, 3,

5, 4, 7, // Face back

5, 7, 6,

0, 6, 7, // Face bottom

0, 1, 6

};

private GPUBuffer _vertexBuffer;

private GPUBuffer _indexBuffer;

private GPUPipelineState _psCustom;

private Shader _shader;

public Shader Shader

{

get => _shader;

set

{

if (_shader != value)

{

_shader = value;

ReleaseShader();

}

}

}

public override unsafe void OnEnable()

{

UseSingleTarget = true; // This postfx overdraws the input buffer without using output

Location = PostProcessEffectLocation.BeforeForwardPass; // Custom draw location in a pipeline

// Create vertex buffer for custom geometry drawing

_vertexBuffer = new GPUBuffer();

fixed (Float3* ptr = _vertices)

{

var desc = GPUBufferDescription.Vertex(sizeof(Float3), _vertices.Length, new IntPtr(ptr));

_vertexBuffer.Init(ref desc);

}

// Create index buffer for custom geometry drawing

_indexBuffer = new GPUBuffer();

fixed (uint* ptr = _triangles)

{

var desc = GPUBufferDescription.Index(sizeof(uint), _triangles.Length, new IntPtr(ptr));

_indexBuffer.Init(ref desc);

}

#if FLAX_EDITOR

// Register for asset reloading event and dispose resources that use shader

Content.AssetReloading += OnAssetReloading;

#endif

// Register postFx to all game views (including editor)

SceneRenderTask.AddGlobalCustomPostFx(this);

}

#if FLAX_EDITOR

private void OnAssetReloading(Asset asset)

{

// Shader will be hot-reloaded

if (asset == Shader)

ReleaseShader();

}

#endif

public override void OnDisable()

{

// Remember to unregister from events and release created resources (it's gamedev, not webdev)

SceneRenderTask.RemoveGlobalCustomPostFx(this);

#if FLAX_EDITOR

Content.AssetReloading -= OnAssetReloading;

#endif

ReleaseShader();

Destroy(ref _vertexBuffer);

Destroy(ref _indexBuffer);

}

private void ReleaseShader()

{

// Release resources using shader

Destroy(ref _psCustom);

}

public override bool CanRender()

{

return base.CanRender() && Shader && Shader.IsLoaded;

}

public override unsafe void Render(GPUContext context, ref RenderContext renderContext, GPUTexture input, GPUTexture output)

{

// Here we perform custom rendering on top of the built-in drawing

// Setup missing resources

if (!_psCustom)

{

_psCustom = new GPUPipelineState();

var desc = GPUPipelineState.Description.Default;

desc.VS = Shader.GPU.GetVS("VS_Custom");

desc.PS = Shader.GPU.GetPS("PS_Custom");

desc.DepthEnable = true;

desc.DepthWriteEnable = true;

desc.CullMode = CullMode.TwoSided;

// Enable blending for transparency

desc.BlendMode = BlendingMode.AlphaBlend;

_psCustom.Init(ref desc);

}

// Set constant buffer data (memory copy is used under the hood to copy raw data from CPU to GPU memory)

var cb = Shader.GPU.GetCB(0);

if (cb != IntPtr.Zero)

{

var data = new Data();

Matrix.Multiply(ref renderContext.View.View, ref renderContext.View.Projection, out var viewProjection);

Actor.GetLocalToWorldMatrix(out var world);

Matrix.Transpose(ref world, out data.WorldMatrix);

Matrix.Transpose(ref viewProjection, out data.ViewProjectionMatrix);

context.UpdateCB(cb, new IntPtr(&data));

}

// Draw geometry using custom Pixel Shader and Vertex Shader

context.BindCB(0, cb);

context.BindIB(_indexBuffer);

context.BindVB(new[] {_vertexBuffer});

context.SetState(_psCustom);

context.SetRenderTarget(renderContext.Buffers.DepthBuffer.View(), input.View());

context.DrawIndexed((uint)_triangles.Length);

}

}

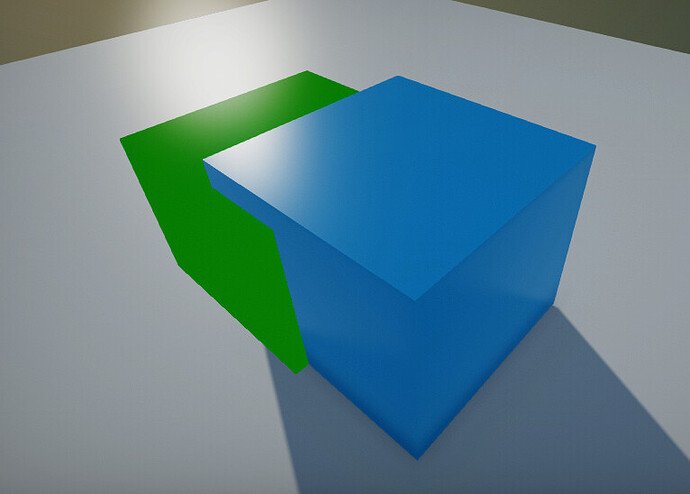

The code above has only a few minor changes from the sample, as discussed earlier. Here is my very basic test shader; all it does is try to set o.depth to a constant. Setting o.depth to 0 or 1 should put the object fully in front of or behind everything else. Instead the value is ignored: in the screenshot above, the green cube's depth is written as normal, no matter what o.depth is set to.

#include "./Flax/Common.hlsl"

META_CB_BEGIN(0, Data)

float4x4 WorldMatrix;

float4x4 ViewProjectionMatrix;

META_CB_END

// Geometry data passed to the vertex shader

struct ModelInput

{

float3 Position : POSITION;

};

// Interpolants passed from the vertex shader

struct VertexOutput

{

float4 Position : SV_Position;

float3 WorldPosition : TEXCOORD0;

};

// Interpolants passed to the pixel shader

struct PixelInput

{

float4 Position : SV_Position;

float3 WorldPosition : TEXCOORD0;

};

struct PixelShaderOutput

{

float4 Color : SV_Target; // Existing color output

float Depth : SV_Depth; // Additional depth output

};

// Vertex shader function for custom geometry processing

META_VS(true, FEATURE_LEVEL_ES2)

META_VS_IN_ELEMENT(POSITION, 0, R32G32B32_FLOAT, 0, 0, PER_VERTEX, 0, true)

VertexOutput VS_Custom(ModelInput input)

{

VertexOutput output;

output.WorldPosition = mul(float4(input.Position.xyz, 1), WorldMatrix).xyz;

output.Position = mul(float4(output.WorldPosition.xyz, 1), ViewProjectionMatrix);

return output;

}

struct frag_out

{

float4 color : SV_Target0;

float depth : SV_Depth;

};

META_PS(true, FEATURE_LEVEL_ES3)

frag_out PS_Custom(PixelInput input) : SV_Target

{

frag_out o;

o.color = float4(0, 1, 0, 0.5);

o.depth = 0.0; // Just for testing: whatever value in [0, 1] I use, the normal cube depth is still written

return o;

}
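
One detail in the shader above that may be worth ruling out: PS_Custom returns a struct whose members carry their own semantics, yet the function itself is also tagged `: SV_Target`. As far as I understand, an HLSL compiler may let a semantic on the function override the semantics declared inside the returned struct (FXC has a warning along the lines of "semantics in type overridden by variable/function or enclosing type"), in which case the depth member might never be bound to SV_Depth. This is an assumption, not a confirmed cause. A minimal variant without the function-level semantic would look like this:

```hlsl
#include "./Flax/Common.hlsl"

// Same interpolants as in the test shader above
struct PixelInput
{
    float4 Position : SV_Position;
    float3 WorldPosition : TEXCOORD0;
};

// Output struct: each member carries its own semantic
struct frag_out
{
    float4 color : SV_Target0;
    float depth : SV_Depth;
};

// Variant of PS_Custom with no ": SV_Target" after the parameter list,
// so the per-member semantics are the only ones in play (assumed fix, untested)
META_PS(true, FEATURE_LEVEL_ES3)
frag_out PS_Custom(PixelInput input)
{
    frag_out o;
    o.color = float4(0, 1, 0, 0.5);
    o.depth = 0.0; // constant test value
    return o;
}
```

If the cube still writes its normal depth with this variant, the function-level semantic is not the culprit and the problem lies elsewhere in the pipeline setup.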

I tried other settings in the shader, but Flax crashed a lot when I tried more exotic things, so in the end I got stuck.

For the full context, I wrote a simple shader in Unity that illustrates what I am trying to achieve:

Shader "Unlit/TestShader"

{

Properties

{

}

SubShader

{

Tags { "Queue"="AlphaTest" }

Cull Front

Pass

{

Tags { "RenderType"="AlphaTest" }

ZWrite On

CGPROGRAM

#include "UnityCG.cginc"

#define PI 3.14159265358979323846

#pragma vertex vert

#pragma fragment frag

struct v2f

{

float4 pos : SV_POSITION;

float3 world_pos : TEXCOORD0;

float3 ray_origin : TEXCOORD2;

float3 ray_direction : TEXCOORD3;

};

struct frag_out

{

half4 color : SV_Target0;

float depth : SV_Depth;

};

struct ray

{

float3 origin;

float3 dir;

};

v2f vert(appdata_base v)

{

v2f o;

o.world_pos = mul(unity_ObjectToWorld, v.vertex).xyz;

o.pos = mul(unity_MatrixVP, float4(o.world_pos, 1));

o.ray_origin = mul(unity_WorldToObject, _WorldSpaceCameraPos);

o.ray_direction = v.vertex - o.ray_origin;

return o;

}

bool cast_ray(ray r, out float3 hit_pos, out float4 color)

{

float sphere_radius = 0.5;

float3 oc = r.origin;

float a = dot(r.dir, r.dir);

float b = 2.0 * dot(oc, r.dir);

float c = dot(oc, oc) - sphere_radius * sphere_radius;

float discriminant = b*b - 4*a*c;

if (discriminant > 0)

{

float temp = (-b - sqrt(discriminant)) / (2.0 * a);

if (temp < 0)

{

temp = (-b + sqrt(discriminant)) / (2.0 * a);

}

if (temp < 0)

{

return false;

}

hit_pos = r.origin + temp * r.dir;

float3 normal = hit_pos / sphere_radius;

color=float4(normal.x,normal.y,normal.z,1);

return true;

}

return false;

}

frag_out frag(v2f i)

{

frag_out o;

ray ray;

// These are initially in world space

ray.origin = _WorldSpaceCameraPos;

ray.dir = normalize(i.world_pos - ray.origin);

// Transform the ray into the object's local space

ray.origin = mul(unity_WorldToObject, float4(ray.origin, 1.0)).xyz;

ray.dir = mul(unity_WorldToObject, ray.dir);

ray.dir = normalize(ray.dir);

float3 hit_obj_pos;

float4 color;

if (cast_ray(ray, hit_obj_pos, color))

{

//Return from objects local space to world space

const float3 hit_world_pos = mul(unity_ObjectToWorld, float4(hit_obj_pos, 1.0)).xyz;

o.color = color;

const float4 clip_pos = UnityWorldToClipPos(hit_world_pos);

o.depth = clip_pos.z / clip_pos.w;

return o;

}

discard;

return o;

}

ENDCG

}

}

}

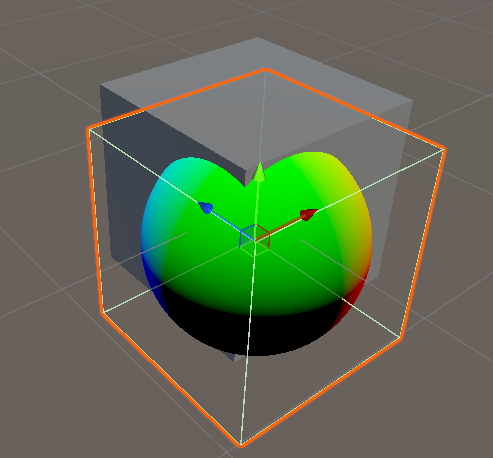

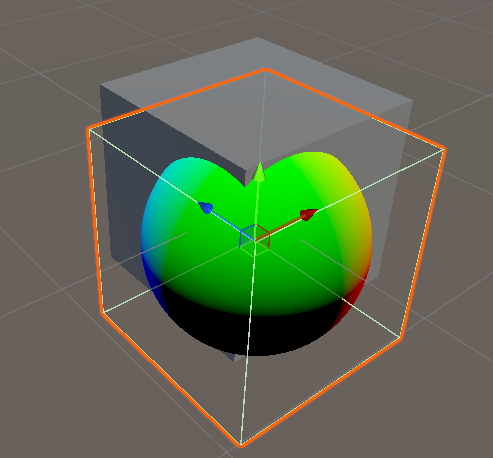

The idea is that with this technique we can add path-traced, ray-marched, or ray-traced objects and insert them into the scene like any other object.

This image shows the ray-traced colored sphere interacting with a regular cube in the Unity editor. The sphere is in fact a cube mesh with the ray-tracing material on each face. As you can see, it looks like a true sphere because the fragment shader modifies the depth.

It is not a mainstream effect, but it is quite powerful because it enables ray-traced effects in parts of the scene on non-RTX hardware. The new game I am researching will depend on this mechanism.
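
For reference, the depth write at the heart of the Unity shader (`o.depth = clip_pos.z / clip_pos.w`) might translate to the Flax test shader roughly as follows. This is only a sketch under stated assumptions: it reuses the Data constant buffer and the `mul(vector, matrix)` convention from my test shader, PS_DepthFromHit is a hypothetical name, and input.WorldPosition stands in for the hit position that the real ray cast would produce.

```hlsl
#include "./Flax/Common.hlsl"

// Same constant buffer layout as in the test shader
META_CB_BEGIN(0, Data)
float4x4 WorldMatrix;
float4x4 ViewProjectionMatrix;
META_CB_END

struct PixelInput
{
    float4 Position : SV_Position;
    float3 WorldPosition : TEXCOORD0;
};

struct frag_out
{
    float4 color : SV_Target0;
    float depth : SV_Depth;
};

// Hypothetical pixel shader: writes the depth of a world-space hit point
META_PS(true, FEATURE_LEVEL_ES3)
frag_out PS_DepthFromHit(PixelInput input)
{
    frag_out o;

    // Placeholder: the real shader would compute this from the ray cast
    float3 hitWorldPos = input.WorldPosition;

    // Project the hit point to clip space, as the vertex shader does
    float4 clipPos = mul(float4(hitWorldPos, 1), ViewProjectionMatrix);

    o.color = float4(0, 1, 0, 1);
    o.depth = clipPos.z / clipPos.w; // perspective divide gives the depth-buffer value
    return o;
}
```

With the placeholder in place this should reproduce the cube's ordinary depth, so it would only become interesting once the hit position comes from the ray cast.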