Hello everyone,

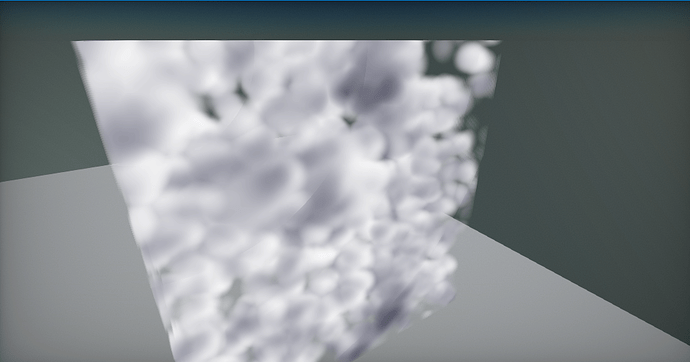

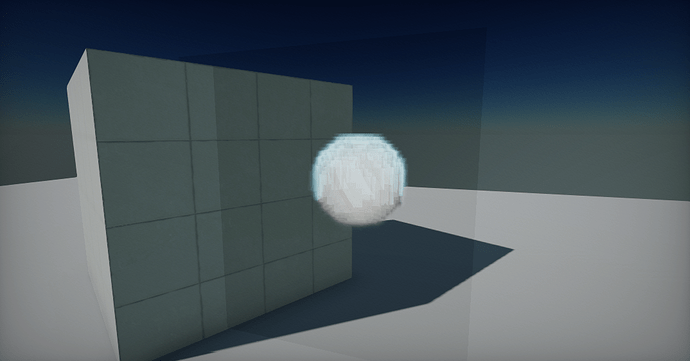

I have been dabbling in volume rendering, and for the most part it is ‘relatively’ simple to build it on top of the existing standard 3D graphics pipeline.

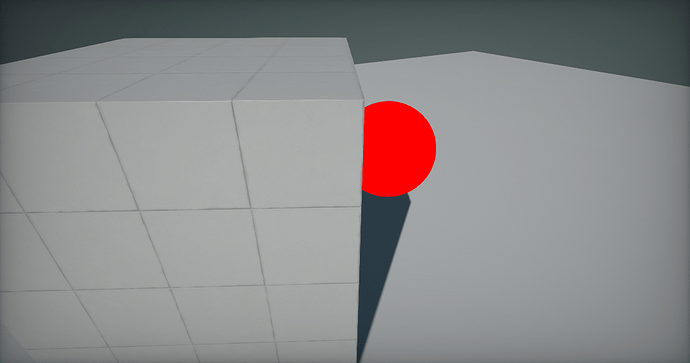

For now, my test renderer has an underlying dataset and just visualizes density points in space, but the depth testing is giving me real headaches.

I tried standard OpenGL-style depth testing, but something is off, and I suspect my clip-space to world-coordinate calculations are not the way they should be.

Here is the code in my pixel shader (I render the volume on the surface of a cube):

    META_PS(true, FEATURE_LEVEL_ES2)
    float4 PS_Custom(PixelInput input) : SV_Target
    {
        // Get depthbuffer value at point of model hull
        float4 depthBufferValue = DepthBuffer.Sample(MeshTextureSampler, input.Position.xy);

        // Adjust to clipspace range [-1,1]
        float4 clipSpaceValue = float4(input.Position.xy * 2.0f - 1.0f, depthBufferValue.x * 2.0f - 1.0f, 1.0f);

        // From clipspace to normalized world space
        float4 homogenousPosition = mul(clipSpaceValue, ViewProjectionMatrixInverse);

        // Calc real world position
        float4 worldPosition = float4(homogenousPosition.xyz / homogenousPosition.w, 1);

        // Translate to local position
        float3 localPosition = mul(worldPosition, LocalMatrix).xyz;

        float depthLength = length(localPosition - input.CameraLocal);
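For reference, here is the reconstruction I am trying to implement, written out as I understand it. This is only a sketch under assumptions I have not verified: $W$ and $H$ (the viewport size in pixels) are names I made up and do not appear in my shader, $p$ is `input.Position.xy` assuming it arrives in pixel units as `SV_Position` normally does in a pixel shader, $d$ is the depth buffer sample taken at $(u, v)$, and the depth range is assumed to be the D3D-style $[0,1]$. Vectors are rows, matching `mul(vector, matrix)`.

$$
\begin{aligned}
(u, v) &= \left(\frac{p_x}{W},\ \frac{p_y}{H}\right) \\
\mathbf{c} &= \left(2u - 1,\ 1 - 2v,\ d,\ 1\right) \\
\mathbf{h} &= \mathbf{c}\,(VP)^{-1} \\
\mathbf{x}_{\text{world}} &= \frac{\mathbf{h}_{xyz}}{h_w} \\
\mathbf{x}_{\text{local}} &= \left(\mathbf{x}_{\text{world}},\ 1\right) M_{\text{local}}
\end{aligned}
$$

With an OpenGL-style depth range of $[-1,1]$ the third component of $\mathbf{c}$ would be $2d - 1$ instead of $d$. Compared with this, my shader uses `input.Position.xy` directly both as the texture coordinate and as the input to the $[-1,1]$ remap, and it remaps depth with $2d - 1$, so one of those may be where it goes wrong.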

And this is the code that sets up the shader and the matrices:

    var data = new Data();
    Matrix.Multiply(ref renderContext.View.View, ref renderContext.View.Projection, out var viewProjection);
    Actor.GetLocalToWorldMatrix(out var world);
    Matrix.Invert(ref viewProjection, out var viewProjectionInverse);
    Matrix.Transpose(ref world, out data.WorldMatrix);
    Matrix.Transpose(ref viewProjection, out data.ViewProjectionMatrix);
    Matrix.Transpose(ref viewProjectionInverse, out data.ViewProjectionMatrixInverse);
    Actor.GetWorldToLocalMatrix(out var local);
    Matrix.Transpose(ref local, out data.LocalMatrix);
    data.CameraPosition = Cam.Position;

    context.BindSR(0, _gpuTextureBuffer);
    context.BindSR(1, renderContext.Buffers.DepthBuffer);

Can someone help me with this?
